How to Measure Network Latency: A Developer's Practical Guide

Learn how to measure network latency with this comprehensive guide. We cover essential command-line tools like ping and traceroute, plus browser-based testing techniques.

Want to measure network latency? You can start with simple, built-in command-line tools like ping and traceroute to get a quick read on Round-Trip Time (RTT). Or, you can pop open your browser's developer tools to see how delays are affecting what your users actually experience.

These methods give you a fast, useful snapshot of how long it takes for a data packet to travel from a source, reach a destination, and make the trip back.

Why Measuring Latency Is Non-Negotiable

Before we get into the "how," let's talk about the "why." For developers and network engineers, latency isn't just a number on a screen; it's the invisible hand shaping the entire user experience. In today's applications, milliseconds are everything. Even a tiny delay can be the difference between a service that feels instant and one that feels broken.

Think about the real-world consequences:

- API Responsiveness: A single slow API call can create a domino effect, holding up everything from loading a user's profile to processing a critical payment.

- Real-Time Data Streams: For online gaming, live video, or financial trading, low and consistent latency is the absolute foundation. Without it, these applications simply don't work.

- User Retention: There's a direct line connecting slow-loading websites and apps to higher bounce rates and abandoned shopping carts. This stuff hits the bottom line, hard.

Distinguishing Key Latency Concepts

To measure network latency accurately, you have to know what you're looking at. The two most fundamental concepts are Round-Trip Time (RTT) and one-way latency.

RTT is the total time it takes for a signal to go from point A to point B and back again. It's the most common metric you'll see because it's straightforward to measure—you only need access to one end of the connection.

One-way latency, as the name suggests, measures the time it takes for data to travel in just a single direction. This is a much trickier measurement to get right because it requires tightly synchronized clocks at both endpoints. However, it's a far more precise indicator for asymmetrical connections, where your upload and download paths behave very differently.
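To make the clock problem concrete, here is a minimal TypeScript sketch built on the four-timestamp exchange that NTP also uses. The `ProbeTimestamps` shape and both function names are hypothetical, and the clock offset is assumed to be known; in practice, estimating that offset accurately is exactly the hard part.

```typescript
// Four timestamps from one probe exchange, in milliseconds.
// t0 and t3 are read from the client's clock; t1 and t2 from the server's clock.
interface ProbeTimestamps {
  t0: number; // client sends request
  t1: number; // server receives request
  t2: number; // server sends reply
  t3: number; // client receives reply
}

// RTT only subtracts timestamps taken on the same clock, so any clock offset
// cancels out. Server processing time (t2 - t1) is removed to leave network time.
function roundTripMs(p: ProbeTimestamps): number {
  return (p.t3 - p.t0) - (p.t2 - p.t1);
}

// One-way delays mix the two clocks. clockOffsetMs is how far the server's
// clock runs ahead of the client's; any error in it lands directly in the result.
function oneWayMs(p: ProbeTimestamps, clockOffsetMs: number): { forward: number; reverse: number } {
  return {
    forward: p.t1 - p.t0 - clockOffsetMs,
    reverse: p.t3 - p.t2 + clockOffsetMs,
  };
}
```

With the server clock 100 ms ahead, the probe `{ t0: 0, t1: 130, t2: 131, t3: 41 }` yields a 40 ms RTT split into 30 ms out and 10 ms back. Assume the clocks agree (offset 0) and the same timestamps claim a 130 ms forward trip and an impossible -90 ms return, while the RTT stays correct.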

The importance of all this becomes crystal clear when you're doing serious load performance testing, which is where theory meets reality and bottlenecks are exposed.

To put some numbers on it, network monitoring experts generally classify latency like this:

- Low latency: Under 50 milliseconds

- Moderate latency: 50-150 ms

- High latency: Over 150 ms
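If you want scripts or dashboards to label results consistently, these bands translate directly into a tiny helper. This is a hedged TypeScript sketch with a hypothetical function name; treating exactly 50 ms and 150 ms as moderate is an assumption, since the ranges above meet at their edges.

```typescript
type LatencyClass = "low" | "moderate" | "high";

// Rule-of-thumb bands: under 50 ms is low, 50-150 ms moderate, over 150 ms high.
// Both boundary values (50 and 150) are counted as moderate in this sketch.
function classifyLatency(rttMs: number): LatencyClass {
  if (rttMs < 50) return "low";
  if (rttMs <= 150) return "moderate";
  return "high";
}
```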

From my experience, a quick test to a nearby server might show a perfectly acceptable 20-40 ms. But that number can easily balloon to over 200 ms for traffic that has to cross an ocean, which can be a game-changer for your application's performance.
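Part of that ocean-crossing penalty is pure physics. Light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), which puts a hard floor under any long-haul RTT. A back-of-the-envelope TypeScript sketch, treating the 200 km/ms figure as an approximation and the function name as hypothetical:

```typescript
// Approximate speed of light in optical fiber: about 200 km per millisecond.
const FIBER_KM_PER_MS = 200;

// Lower bound on RTT from propagation delay alone (out and back).
// Real cable routes are longer than the straight-line distance, and queuing
// and router processing add more delay, so measured RTTs will be higher.
function minRoundTripMs(pathKm: number): number {
  return (2 * pathKm) / FIBER_KM_PER_MS;
}
```

For a hypothetical 6,000 km fiber path, `minRoundTripMs(6000)` returns 60, so that route cannot get below about 60 ms no matter how well the endpoints are tuned.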

To make sense of the jargon you'll encounter, here’s a quick reference.

Key Latency Concepts at a Glance

| Concept | What It Measures | Why It Matters |
|---|---|---|
| Latency (Ping) | The time it takes for a single data packet to travel from a source to a destination and back. Measured in milliseconds (ms). | This is the raw measure of delay. Low latency is crucial for real-time applications like gaming, VoIP, and video conferencing. |
| Round-Trip Time (RTT) | Essentially the same as latency, this is the total duration for a signal to be sent plus the time for an acknowledgment to be received. | RTT is the most common and practical way to measure latency from a single point, making it the go-to metric for tools like ping. |
| One-Way Latency | The time it takes for a packet to travel from source to destination in a single direction. | Provides a more granular view, especially for asymmetrical networks where upload and download paths have different latencies. |
| Jitter | The variation in latency over time. It measures the inconsistency of packet arrival times. | High jitter is just as bad as high latency for streaming media and online calls, causing stuttering, buffering, and glitches. |
| Bandwidth | The maximum amount of data that can be transmitted over a network connection in a given amount of time. Measured in Mbps or Gbps. | Often confused with speed, bandwidth is about capacity. You can have high bandwidth but still suffer from high latency. |

These concepts are the building blocks for understanding any network performance issue.

This is where having accessible, integrated tools becomes so important. Instead of running complex diagnostic suites, modern browser extensions and development tools can give you the insights you need without ever leaving your workflow. It's about making latency measurement an effortless, routine part of building and maintaining great software.

Getting Your Hands Dirty with Command-Line Latency Tools

To really get a feel for your network's performance, you've got to pop open the terminal. The command line is where you'll find the fundamental tools that give you raw, unfiltered data about your connection. It’s about seeing what’s really happening with the packets moving between you and a destination, and it's the essential first step for any developer serious about measuring latency.

The classic, go-to utility is ping. It's beautifully simple: it sends a tiny data packet (an ICMP echo request) to a server and just waits for it to come back. That simple round trip is the basis for calculating Round-Trip Time (RTT) and gives you an instant health check on a connection.

Your First Latency Check with Ping

Running a ping test couldn't be easier. Fire up your terminal or command prompt, type ping, and follow it with the domain you want to test.

By default, ping keeps going until you stop it on macOS and Linux, while Windows sends just four packets and stops. For any real analysis, you'll want to control this: use `ping -c 20 example.com` on macOS and Linux, or `ping -n 20 example.com` on Windows. Sending ten or twenty packets gives you a much more reliable picture of the connection's stability than just a couple.
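If you plan to script repeated runs, here is a minimal Node.js sketch in TypeScript that picks the right count flag per platform (-c on macOS and Linux, -n on Windows) and captures ping's output. The helper names are hypothetical; the sketch simply shells out to the system ping binary.

```typescript
import { execFile } from "node:child_process";

// Build ping arguments for a fixed number of echo requests:
// -n sets the count on Windows, -c on macOS and Linux.
function pingArgs(host: string, count: number, platform: string = process.platform): string[] {
  const countFlag = platform === "win32" ? "-n" : "-c";
  return [countFlag, String(count), host];
}

// Run the system ping and resolve with its raw text output.
function runPing(host: string, count = 20): Promise<string> {
  return new Promise((resolve, reject) => {
    execFile("ping", pingArgs(host, count), (err, stdout) => {
      // ping usually exits non-zero when no replies come back at all.
      if (err) reject(err);
      else resolve(stdout);
    });
  });
}
```

Calling `runPing("example.com").then(console.log)` prints the same summary you would see in the terminal.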

Once it's done, you'll get a neat summary with the crucial numbers:

- Packets Transmitted/Received: This tells you if any data was lost along the way. Even a small amount of packet loss is a major red flag for network trouble.

- Round-trip min/avg/max/mdev: These are your core latency stats. You get the best-case time (`min`), the average (`avg`), and the worst-case (`max`). The `mdev` value (labeled `stddev` on macOS) tells you how much the latency varies from one packet to the next, which makes it a handy measure of jitter.
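To pull these numbers into a script instead of reading them by eye, you can parse the summary lines. This is a minimal TypeScript sketch that assumes the Linux (iputils) and macOS formats shown in the comments; Windows prints a different summary that it does not handle, and exact wording can vary between ping versions. The interface and function names are hypothetical.

```typescript
interface PingSummary {
  transmitted: number;
  received: number;
  lossPercent: number;
  min?: number;
  avg?: number;
  max?: number;
  spread?: number; // "mdev" on Linux, "stddev" on macOS
}

// Parse the statistics block printed at the end of a Linux or macOS ping run.
function parsePingSummary(output: string): PingSummary | null {
  // Linux: "10 packets transmitted, 9 received, 10% packet loss, time 9012ms"
  // macOS: "10 packets transmitted, 10 packets received, 0.0% packet loss"
  const counts = output.match(/(\d+) packets transmitted, (\d+) (?:packets )?received/);
  if (!counts) return null;
  const transmitted = Number(counts[1]);
  const received = Number(counts[2]);
  const summary: PingSummary = {
    transmitted,
    received,
    lossPercent: transmitted === 0 ? 0 : ((transmitted - received) * 100) / transmitted,
  };
  // Linux: "rtt min/avg/max/mdev = 9.880/10.526/11.893/0.602 ms"
  // macOS: "round-trip min/avg/max/stddev = 14.1/15.2/18.9/1.3 ms"
  const rtt = output.match(/min\/avg\/max\/(?:mdev|stddev) = ([\d.]+)\/([\d.]+)\/([\d.]+)\/([\d.]+)/);
  if (rtt) {
    summary.min = Number(rtt[1]);
    summary.avg = Number(rtt[2]);
    summary.max = Number(rtt[3]);
    summary.spread = Number(rtt[4]);
  }
  return summary;
}
```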

Pay close attention to the gap between your minimum and maximum RTT. If it's wide, your connection is unstable, even if the average looks okay. This jitter can be far more disruptive to real-time apps like video calls or gaming than a connection that's consistently a bit slow.

A common mistake is just glancing at the average RTT. An average of 50ms might seem fine, but if your minimum is 20ms and your maximum is 250ms, the user experience will feel choppy and unreliable. Always look at the full range to understand jitter.

Following the Trail with Traceroute and MTR

So, what do you do when ping reveals high latency or packet loss? Your next job is to figure out where the problem is. That’s what traceroute (or tracert on Windows) is for. It maps the entire path your packets take, showing you every single "hop"—each router—between your machine and the final destination.

Each line in the traceroute output is a hop, and it usually shows three separate latency measurements to that point. This lets you pinpoint if a specific router along the path is causing a major slowdown or dropping packets.
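If you want to work with traceroute output programmatically, a small parser goes a long way. This TypeScript sketch assumes the common Linux/macOS line format (hop number, host, then per-probe times ending in "ms", with `*` for a probe that got no reply); Windows tracert output is laid out differently and isn't targeted here. The `Hop` shape and function name are hypothetical.

```typescript
interface Hop {
  hop: number;
  rttsMs: number[]; // one entry per probe that got a reply
  timeouts: number; // probes shown as "*"
}

// Parse lines such as
//   " 3  router.example (203.0.113.1)  12.345 ms  11.987 ms  12.101 ms"
//   " 4  * * *"
// Lines that don't start with a hop number (like the header) are skipped.
function parseTraceroute(output: string): Hop[] {
  const hops: Hop[] = [];
  for (const line of output.split("\n")) {
    const m = line.match(/^\s*(\d+)\s+(.*)$/);
    if (!m) continue; // header or blank line
    const rest = m[2];
    // Numbers immediately followed by " ms" are probe times; IP addresses are not.
    const rttsMs = (rest.match(/[\d.]+(?= ms)/g) ?? []).map(Number);
    const timeouts = (rest.match(/\*/g) ?? []).length;
    hops.push({ hop: Number(m[1]), rttsMs, timeouts });
  }
  return hops;
}
```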

But traceroute is a one-and-done snapshot. For a more dynamic, continuous look, most network pros I know swear by MTR (My Traceroute). MTR combines ping and traceroute into a single tool: it constantly sends packets to every hop on the route, giving you a live, updating view of latency and packet loss at every point. This makes it incredibly effective at catching intermittent problems that a single traceroute would likely miss. If you need a shareable result rather than a live screen, `mtr --report example.com` runs a fixed number of cycles and prints a summary table.

Why Your Choice of Tool Matters

The tool you pick and how you configure it can drastically change your results. This is especially true in ultra-fast, low-latency environments like cloud data centers.

It's actually pretty eye-opening how different the numbers can be. In a detailed experiment run by Google Cloud, a standard ping test reported an average RTT of 146 microseconds. But when they used another tool that sends transactions back-to-back without a pause, the RTT dropped to just 66.59 microseconds, less than half of what ping reported.

This is a perfect example of why ping can sometimes overestimate latency. It shows that understanding how a tool works is critical for getting measurements you can trust.
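To illustrate the back-to-back idea at the application level, here is a minimal Node.js sketch in TypeScript that keeps exactly one tiny request in flight over a TCP connection and fires the next one the moment the reply lands. It is loosely in the spirit of request/response benchmarks, not the tool from the Google Cloud experiment, and it assumes the remote end echoes bytes back; the function name is hypothetical.

```typescript
import * as net from "node:net";
import { performance } from "node:perf_hooks";

// Send `count` one-byte probes back-to-back over a single TCP connection and
// record each request/response round trip in milliseconds.
// Assumes the peer echoes every byte it receives.
function measureEchoRtts(host: string, port: number, count: number): Promise<number[]> {
  return new Promise((resolve, reject) => {
    const samples: number[] = [];
    const socket = net.createConnection({ host, port });
    let sentAt = 0;

    const sendProbe = (): void => {
      sentAt = performance.now();
      socket.write("x");
    };

    socket.on("connect", () => {
      socket.setNoDelay(true); // disable Nagle's algorithm so small writes aren't held back
      sendProbe();
    });
    socket.on("data", () => {
      // Only one byte is ever in flight, so each data event is one echo.
      samples.push(performance.now() - sentAt);
      if (samples.length < count) {
        sendProbe(); // no pause between probes
      } else {
        socket.end();
        resolve(samples);
      }
    });
    socket.on("error", reject);
  });
}
```

Point it at an echo service you control and compare the results with ping to the same machine to see how much the tool itself shapes the numbers.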

Finding Your Connection's Top Speed with iperf

Latency isn't always the whole picture. Sometimes you need to know the maximum amount of data your connection can actually push through—its bandwidth. For that job, the tool you want is iperf.

While ping measures delay, iperf is all about throughput. It works by setting up a client-server connection and then blasting as much data as it can between them for a set amount of time.

To use iperf, you'll need two machines:

- On one machine, run iperf in server mode (`iperf -s`). It will just sit there and listen for a connection.

- On the other machine, run iperf in client mode, pointing it at the server's address (`iperf -c <server-address>`).

The client will connect and the test will start. The output tells you the total data transferred and, most importantly, the bitrate (your bandwidth) in megabits or gigabits per second. It’s the perfect way to stress-test a network link and find out what it’s truly capable of.

Measuring Latency from a User's Perspective

While command-line tools give you a raw, unfiltered look at your network, the only latency that truly matters for a web application is what the end-user actually experiences. This is where we shift our focus from the terminal to the browser itself. What happens inside the browser tells a much richer, more relevant story about performance.

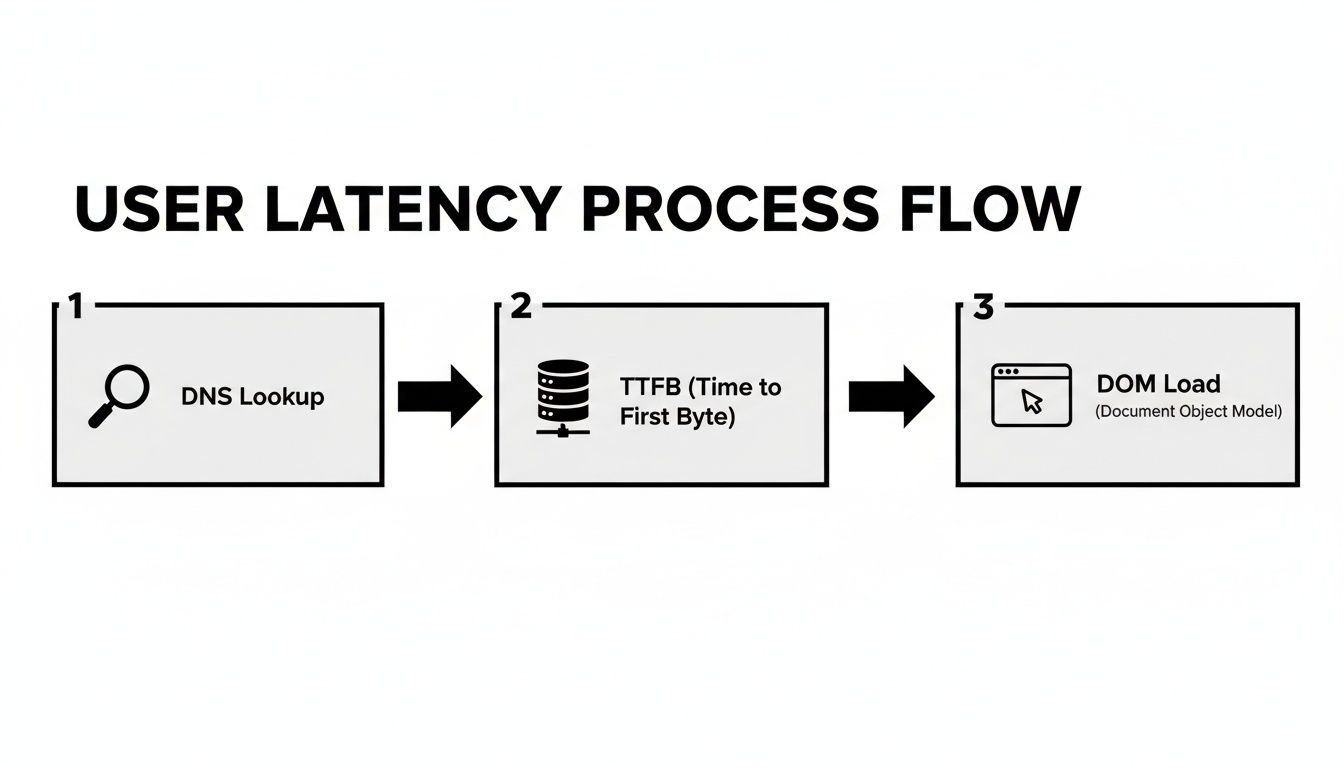

It's never just about a single packet's round trip. The latency a user feels is a complex cocktail of DNS lookups, TCP handshakes, TLS negotiations, server processing time, and of course, the time it takes to actually render the content on screen. Thankfully, modern browsers come packed with powerful built-in tools to help us dissect this entire process.

Diving into Browser Developer Tools

Every major browser—Chrome, Firefox, Edge, Safari—comes equipped with a suite of developer tools. The "Network" tab within these tools is your command center for understanding how your site loads. It lays everything out in a waterfall chart, which is a visual breakdown of every single request the browser makes to render a page.

This waterfall view is invaluable. You can see precisely how long each asset took to download, from the initial HTML document and CSS stylesheets to images and API calls. More importantly, it breaks down the lifecycle of each request into distinct phases:

- DNS Lookup: The time it takes to resolve a domain name to an IP address.

- Initial Connection: The time spent establishing a TCP connection with the server.

- SSL/TLS Handshake: The overhead required to set up a secure connection.

- Time to First Byte (TTFB): This is a huge one. It measures how long the browser waited before receiving the very first byte of data from the server.

- Content Download: The time spent actually downloading the resource itself.

A high TTFB, for instance, is a classic sign of a sluggish backend or server-side processing issue—something a simple ping test would never uncover. By analyzing this waterfall, you can quickly spot which resources are blocking rendering or just taking way too long to load.

A key takeaway from my experience is to not just look at the total load time but to hunt for the longest bars in the waterfall. A single unoptimized image or a slow third-party API can hold the entire page hostage, creating a poor user experience even if the rest of the site is lightning-fast.

Programmatic Measurement with Timing APIs

For more automated and precise measurements, you can tap into the browser's built-in JavaScript APIs. The Navigation Timing API and Resource Timing API give you programmatic access to the same detailed performance data you see in the developer tools. This is perfect for collecting real user monitoring (RUM) data to understand how your site performs for actual visitors across the globe.

You can grab these metrics with just a few lines of JavaScript, right in the browser console. To get the core performance timings for the main page load, for example, you can call `performance.getEntriesByType('navigation')`. This returns an array whose single entry is a `PerformanceNavigationTiming` object packed with valuable timestamps.

From that data, you can calculate vital metrics:

- DNS Lookup Time: `domainLookupEnd - domainLookupStart`

- TCP Handshake Time: `connectEnd - connectStart` (on HTTPS this span also includes the TLS handshake)

- Time to First Byte (TTFB): `responseStart - requestStart`

- Total Page Load Time: `loadEventEnd - startTime`
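Here is one way to wrap those formulas in a reusable TypeScript function. It's a sketch: the `NavTiming` interface is a hand-written subset of the fields on the browser's `PerformanceNavigationTiming` entry, declared locally so the code also runs outside a browser, and the function name is hypothetical.

```typescript
// Hand-written subset of the browser's PerformanceNavigationTiming fields,
// declared locally so this sketch also compiles and runs outside a browser.
interface NavTiming {
  startTime: number;
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  requestStart: number;
  responseStart: number;
  loadEventEnd: number;
}

// Derive the metrics listed above; every value is in milliseconds.
function navigationMetrics(t: NavTiming) {
  return {
    dnsMs: t.domainLookupEnd - t.domainLookupStart,
    // On HTTPS this span includes the TLS handshake as well as TCP.
    connectMs: t.connectEnd - t.connectStart,
    // Time from sending the request to receiving the first response byte.
    ttfbMs: t.responseStart - t.requestStart,
    // loadEventEnd stays 0 until the load event has finished.
    pageLoadMs: t.loadEventEnd > 0 ? t.loadEventEnd - t.startTime : null,
  };
}

// In a browser, after the page has loaded:
// const [nav] = performance.getEntriesByType("navigation") as PerformanceNavigationTiming[];
// console.log(navigationMetrics(nav));
```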

This approach allows you to build custom dashboards or send performance data to your analytics tools, giving you a continuous pulse on your application's real-world performance. In web development, optimizing images is a common way to improve these metrics; for those interested, we have a helpful guide on choosing the best image format for your website.

Streamlining Checks with Integrated Tools

Jumping between the terminal, browser dev tools, and custom scripts can get old fast. This is where integrated browser extensions can really smooth out your workflow by unifying these checks. For instance, the ShiftShift Extensions suite includes a built-in Speed Test tool that you can pop open instantly from any tab.

This gives you a quick, privacy-focused way to measure your connection's download speed, upload speed, and latency without having to navigate to a separate website or open a terminal. Because it’s part of a larger toolkit, you can run a speed check, format a JSON response, and check a cookie all from the same unified command palette. This kind of integration makes performance checks a natural, friction-free part of the daily development grind.

How to Design a Latency Test That Actually Tells You Something

Anyone can fire off a ping command and get a number back. But if you want data you can actually trust—data that helps you make real decisions—you need to be more deliberate. A single, isolated measurement is just a snapshot in time. To truly understand your network's behavior, you have to think like a detective, considering where you test from, how often you test, and what you're really looking for.

A well-designed test turns raw numbers into actionable insights. A poorly designed one? It's just noise.

The diagram below breaks down all the little delays that add up to what a user feels when they load a webpage. It's a great reminder that a simple network ping doesn't even begin to tell the whole story.

As you can see, from the initial DNS lookup to the final render, multiple steps contribute to the total wait time.

Choosing Your Test Endpoints

The first rule of reliable testing is that geography matters. A test from your office in New York to a server down the road in New Jersey tells you absolutely nothing about the experience for your customers in Tokyo. To get a realistic picture, you have to test from diverse locations that actually mirror your user base.

Your list of endpoints should cover a few key areas:

- Your Biggest User Hubs: Where do most of your customers live? Test from there.

- Cross-Continental Paths: See what happens when data has to cross an ocean. Test between Europe and North America, or Asia and the US, to understand long-haul performance.

- Your Cloud Regions: If you're on AWS, Azure, or GCP, test connectivity to and between the specific data center regions you rely on.

Spreading your tests out like this creates a much more accurate map of global performance. It helps you spot region-specific bottlenecks that you’d otherwise miss completely. This is also a good moment to double-check your domain setup; you can find helpful tips on how to check domain availability and related configurations to ensure everything is in order.

Finding the Right Testing Rhythm

Network conditions are constantly in flux. They change throughout the day, the week, and even the minute. A test run at 3 AM on a Tuesday might look fantastic, but that result is useless if your peak traffic hits at 2 PM on a Friday when everyone is online.

To get a true baseline, you need to test consistently over time. Mix it up:

- Run tests during peak business hours.

- Schedule some for overnight maintenance windows.

- Don't forget the weekends, when traffic patterns can be completely different.

By sampling data repeatedly, you can smooth out the random spikes and dips. This is how you spot recurring problems, like the network getting congested every weekday afternoon right after lunch.
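One practical way to smooth out spikes across many runs is to report percentiles instead of a single average: the median shows the typical experience, and the 95th percentile shows how bad the slow tail gets. A minimal TypeScript sketch using the nearest-rank method (other percentile definitions interpolate and give slightly different numbers); the function names are hypothetical.

```typescript
// Nearest-rank percentile for p in (0, 100]: the smallest sample with at
// least p% of all samples at or below it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.min(sorted.length, Math.max(1, rank)) - 1];
}

// Summarize repeated RTT samples so one lucky or unlucky run doesn't dominate.
function summarizeRtts(samples: number[]) {
  return {
    p50: percentile(samples, 50),
    p95: percentile(samples, 95),
    max: Math.max(...samples),
  };
}
```

For ten samples of `[12, 11, 13, 250, 12, 14, 11, 12, 13, 12]`, the median is 12 ms while the p95 is 250 ms, so a single congested probe shows up in the tail without distorting the typical value.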

Don't Forget About Jitter

Average latency is a solid starting point, but it often hides a more sinister problem: jitter. Jitter is simply the variation in your latency over time. Think about it—a stable connection with a predictable 80ms delay is often way better for real-time apps than one that averages 50ms but bounces wildly between 10ms and 200ms.

Jitter is the silent killer of user experience for anything real-time, like VoIP calls, video conferences, or online gaming. High jitter is what causes choppy audio, frozen video, and frustrating lag spikes that make an application feel completely broken, even when the average latency looks good on paper.

Understanding jitter means looking beyond the average. It's the unsung villain of network performance, and it's the reason averages alone can be so misleading. For example, data from Pandora FMS shows that jitter over 30ms can jack up packet loss rates in gaming to 15%, enough to make a game unplayable. Measuring the standard deviation of your latency results is the first step to putting a number on that instability.
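Here is a minimal TypeScript sketch of two simple jitter measures: the standard deviation of the RTT samples, and the mean difference between consecutive samples, a simplified cousin of the smoothed interarrival jitter that RTP defines in RFC 3550. Neither is claimed to be the exact formula any particular tool uses, and the function names are hypothetical.

```typescript
// Population standard deviation of RTT samples: how widely they spread
// around the mean, comparable to the mdev/stddev figure ping prints.
function stdDev(samples: number[]): number {
  if (samples.length === 0) return 0;
  const mean = samples.reduce((sum, x) => sum + x, 0) / samples.length;
  const variance = samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / samples.length;
  return Math.sqrt(variance);
}

// Mean absolute change between consecutive samples: captures the
// packet-to-packet swings that real-time audio and video actually feel.
function meanConsecutiveDelta(samples: number[]): number {
  if (samples.length < 2) return 0;
  let total = 0;
  for (let i = 1; i < samples.length; i++) {
    total += Math.abs(samples[i] - samples[i - 1]);
  }
  return total / (samples.length - 1);
}
```

A steady `[80, 80, 80, 80]` scores 0 on both, while `[10, 90, 10, 90]` averages a better-looking 50 ms but has a standard deviation of 40 ms and swings 80 ms between consecutive packets.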

Latency Test Design Checklist

To pull all this together, here’s a quick checklist to guide you. Following these steps will help ensure the data you collect is both accurate and genuinely useful.

| Checklist Item | Why It's Important | Actionable Tip |
|---|---|---|
| Define Clear Goals | You can't measure what you don't define. Are you troubleshooting a specific issue or establishing a baseline? | Write down your objective before you start. "Diagnose lag for users in Southeast Asia" is a better goal than "check latency." |
| Select Diverse Endpoints | A single path doesn't represent your global user experience. | Pick 3-5 locations: one local, one on another continent, and a few in your key user markets. |
| Establish a Cadence | One-off tests miss time-based patterns like peak-hour congestion. | Schedule tests to run automatically every hour for a week to capture a full cycle of network behavior. |
| Measure Jitter | Averages hide the erratic performance that ruins real-time applications. | Don't just look at the average RTT. Calculate the standard deviation or use a tool like mtr that shows min/max/avg latency. |
| Use the Right Tools | ping is good for a quick check, but tools like mtr or iperf provide deeper insights. | For web performance, use browser dev tools. For raw network paths, mtr is a great choice. |
| Document Everything | You'll forget the "why" behind your test six months from now. | Keep a simple log: date, time, endpoints, tool used, and a brief note on what you observed. |

By being methodical, you move from simply measuring latency to truly understanding it. This thoughtful approach is what separates a random number from a reliable performance indicator.

Making Sense of the Numbers (and What to Avoid)

Alright, you've run your tests and have a pile of data. This is where the real work begins—translating those raw numbers into something that actually means something. The data is telling you a story about your network's health; you just need to learn how to read it.

For example, a sudden spike in Round-Trip Time (RTT) on a traceroute is a classic clue. If latency jumps at hop number three and stays high all the way to the end, you've likely found your problem: it’s that third router or the link right after it. But be careful. If only that single hop shows high latency and the final destination is still quick, it might just be a router that gives low priority to answering traceroute probes while still forwarding your actual traffic at full speed. It's a common false alarm that can send you down a rabbit hole.
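The "jumps and stays high" rule is easy to automate. This TypeScript sketch takes the average RTT per hop (in path order, destination last) and separates a persistent jump from an isolated spike. The 30 ms threshold and the function name are arbitrary assumptions, and it expects every hop to have answered, so silent (`* * *`) hops need handling before you call it.

```typescript
// Given the average RTT of each hop in path order (destination last), find the
// first hop where latency rises by at least `jumpMs` over the previous hop and
// is still at least `jumpMs` above that earlier baseline at the destination.
// Jumps that vanish by the destination are returned as isolated spikes.
// Hop numbers are 1-based, matching traceroute output.
function findPersistentJump(
  hopAvgMs: number[],
  jumpMs = 30,
): { hop: number | null; isolatedSpikes: number[] } {
  const isolatedSpikes: number[] = [];
  const destination = hopAvgMs[hopAvgMs.length - 1];
  for (let i = 1; i < hopAvgMs.length; i++) {
    const baseline = hopAvgMs[i - 1];
    if (hopAvgMs[i] - baseline < jumpMs) continue;
    if (destination - baseline >= jumpMs) {
      return { hop: i + 1, isolatedSpikes };
    }
    isolatedSpikes.push(i + 1);
  }
  return { hop: null, isolatedSpikes };
}
```

For `[1, 5, 90, 92, 95]` it flags hop 3, because the destination stays high. For `[1, 5, 120, 9, 11]` it returns no culprit and lists hop 3 as an isolated spike: that router is slow to answer probes but the path beyond it is fine.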

Decoding Jitter and Packet Loss

Looking past simple RTT is where you'll find the most critical insights. High jitter, which is just a fancy word for inconsistent latency, can be far more disruptive than latency that's consistently high. This is especially true for anything real-time.

If your results show an average RTT of 40ms, but the minimum was 10ms and the maximum was 150ms, your connection is unstable. That massive variance is exactly what causes annoying stutters in video calls and rage-inducing lag spikes in online games.

Packet loss is an even bigger red flag. Even 1% packet loss can absolutely cripple TCP-based applications, forcing them to constantly resend data and slowing everything to a crawl. When you look at your test results, any real difference between packets sent and packets received needs to be investigated immediately.

One of the biggest mistakes I see people make is assuming a single test tells the whole story. Network conditions are constantly changing. A test run at 3 AM will look completely different from one at 3 PM during peak business hours. The only way to get a true performance baseline is through consistent, repeated testing.

To get ahead of problems, it's worth looking into dedicated tools for network performance monitoring. This shifts your approach from frantically fixing things when they break to proactively keeping your network healthy.

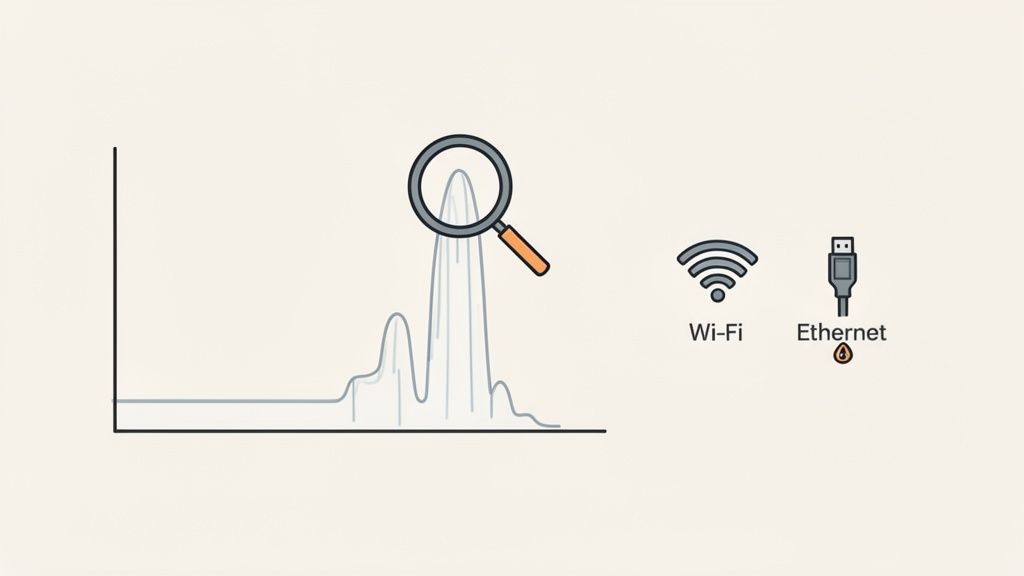

The Most Common Measurement Mistakes

Even with the best tools in the world, a few simple mistakes can render your results completely useless. Avoiding these common pitfalls is non-negotiable if you want data you can actually trust.

- Testing Over Wi-Fi: Seriously, just don't. Wireless connections are notoriously fickle, prone to interference from everything from microwaves to your neighbor's router. For any serious latency testing, plug in with an Ethernet cable. It’s the only way to get a stable, reliable baseline.

- Forgetting VPN Overhead: VPNs are great for security, but they add an extra stop and encryption to your traffic's journey, which almost always increases latency. If you're trying to diagnose a user's slow connection, one of your first questions should be, "Are you on the VPN?" Testing with and without it will show you exactly how much delay it's adding.

- Ignoring Local Network Congestion: Your test results will be skewed if someone else on your network is hogging all the bandwidth. If a colleague is streaming 4K video or downloading massive files while you're testing, your latency numbers will be inflated, and you'll end up chasing a problem that doesn't exist.

Another subtle but critical factor is the tool you choose. As we've covered, different utilities measure latency in different ways. Always be consistent with the tools you use for comparison, and make sure you understand what each one is actually measuring—whether it's a simple ICMP echo or a complex, application-level request. And remember, performance can be affected by many layers; for instance, if you're digging into web performance, our guide on a Cookie Editor Chrome Extension can show how client-side elements play a role.

By interpreting your results with the right context and steering clear of these common mistakes, you'll move beyond just collecting numbers. You'll start to understand the why behind your network's performance, and that’s the key to building faster, more reliable systems.

Common Questions About Network Latency

Even with the right tools, a few common questions always seem to surface when you start digging into network latency. Let's walk through some of the most frequent ones I hear to help you make sense of your results.

What’s Actually a “Good” Latency Number?

This is the classic "it depends" question, but we can definitely set some solid benchmarks. A "good" latency is completely relative to what you’re trying to accomplish.

- Casual Web Browsing: For most of us, anything under 100ms RTT will feel perfectly fine. Pages load quickly, and you won't notice any real lag.

- Competitive Online Gaming: This is where every millisecond counts. Serious gamers and high-frequency traders are looking for latency well below 20ms. It's the difference between winning and losing.

- Video Calls & VoIP: Here, consistency is king. You need a stable latency under 150ms and low jitter (less than 30ms) to avoid that choppy, out-of-sync feeling or, worse, dropped calls.

As a rule of thumb, most network pros I know would classify anything under 50ms as low latency. From 50-150ms is moderate, and once you creep over 150ms, you'll start to feel the drag on most interactive applications.

Why Do My Ping and Browser Speed Test Results Never Match?

This is a fantastic question and a super common point of confusion. It happens because a command-line ping and a browser-based speed test are fundamentally different tools measuring different things.

For starters, they're almost certainly talking to different servers. When you ping a domain, you're hitting a specific target. A web speed test, on the other hand, is designed to find a geographically close server from its own network to give you the best-case-scenario result.

The protocols are also completely different. Ping uses a very lightweight protocol called ICMP. Most browser tests run over TCP, which requires a whole setup process (the "three-way handshake") just to establish a connection. That initial back-and-forth adds a bit of time before the real test even begins.
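To see that handshake cost for yourself, you can time a bare TCP connection. Here is a minimal Node.js sketch in TypeScript (the function name is hypothetical): the time until the socket's `connect` event is roughly one round trip. If you pass a hostname rather than an IP address, the measurement also includes DNS resolution.

```typescript
import * as net from "node:net";
import { performance } from "node:perf_hooks";

// Time a TCP three-way handshake: from starting the connection until the
// socket reports "connect". No application data is exchanged.
function timeTcpConnect(host: string, port: number, timeoutMs = 5000): Promise<number> {
  return new Promise((resolve, reject) => {
    const start = performance.now();
    const socket = net.createConnection({ host, port });
    socket.setTimeout(timeoutMs, () => {
      socket.destroy();
      reject(new Error(`connect to ${host}:${port} timed out`));
    });
    socket.once("connect", () => {
      const elapsed = performance.now() - start;
      socket.destroy(); // we only wanted the handshake
      resolve(elapsed);
    });
    socket.once("error", reject);
  });
}
```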

Finally, browser tests often bake in more than just pure network travel time. Their "latency" number might include server processing time or even small delays within your browser itself, which can inflate the final figure compared to a raw ICMP ping.

How Can I Actually Lower My Network Latency?

Reducing latency is all about hunting down and eliminating bottlenecks, whether they're in your office or across the internet.

The first place to look is your immediate environment. The single most effective change you can make is switching from Wi-Fi to a wired Ethernet connection. It’s a game-changer for stability and speed. If you have to use Wi-Fi, get closer to your router and hop on the 5GHz band if you can—it’s usually less crowded.

Looking beyond your local network, sometimes a DNS swap can help. Using a faster DNS server can shave off milliseconds from the initial connection time when you look up a website.
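To check whether DNS is worth optimizing, time it. This Node.js sketch in TypeScript times a lookup through the operating system's resolver and, separately, a query sent to one specific DNS server via Node's `dns.promises.Resolver`. The function names are hypothetical, and caching at every layer means repeated lookups will look faster than a cold one.

```typescript
import { promises as dnsPromises } from "node:dns";
import { performance } from "node:perf_hooks";

// Time one lookup through the operating system's resolver (what most apps use).
async function timeDnsLookup(hostname: string): Promise<number> {
  const start = performance.now();
  await dnsPromises.lookup(hostname);
  return performance.now() - start;
}

// Time an A-record query sent directly to one DNS server, bypassing the OS resolver.
async function timeResolverQuery(hostname: string, server: string): Promise<number> {
  const resolver = new dnsPromises.Resolver();
  resolver.setServers([server]);
  const start = performance.now();
  await resolver.resolve4(hostname);
  return performance.now() - start;
}
```

For example, running `timeResolverQuery("example.com", "1.1.1.1")` and `timeResolverQuery("example.com", "8.8.8.8")` several times each compares two public resolvers from your network.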

If you’re trying to improve access to a service you control, a Content Delivery Network (CDN) is the answer. It works by placing copies of your content physically closer to your users. And if you're using a VPN, try turning it off. That extra hop and encryption layer almost always adds latency.

I’ve seen corporate VPNs add as much as 70ms to a round-trip time. It can turn a great connection into a frustratingly slow one. Always test with and without your VPN to see what kind of performance hit you’re actually taking.

What's the Real Difference Between Latency and Bandwidth?

Getting this right is fundamental to understanding network performance. It’s easy to mix them up, but they measure two very different things.

Here's the analogy I always use: think of it like a highway.

- Bandwidth is how many lanes the highway has. More lanes mean more cars (data) can travel at the same time.

- Latency is the speed limit. It dictates how fast a single car (a packet of data) can get from A to B.

You could have a massive, ten-lane highway (huge bandwidth) with a 20 mph speed limit (high latency). You could move a ton of data eventually, but real-time things like a video call would be painfully slow. On the flip side, a connection with very low latency feels incredibly snappy and responsive, even if its bandwidth isn't enormous. You really need a good balance of both for a great experience.
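The analogy can be turned into a rough formula: one round trip to ask for the data, plus the time to push its bits through the pipe. This TypeScript sketch deliberately ignores TCP slow start, handshakes, and protocol overhead, so real transfers take longer; the function name is hypothetical.

```typescript
// Rough time to fetch a payload in one request: one RTT plus serialization
// time at the given bandwidth. 1 Mbps = 1,000 bits per millisecond.
function transferTimeMs(payloadBytes: number, rttMs: number, bandwidthMbps: number): number {
  const bitsPerMs = bandwidthMbps * 1000;
  return rttMs + (payloadBytes * 8) / bitsPerMs;
}
```

A 10,000-byte API response over a 10 ms, 10 Mbps link comes out at about 18 ms, while the same response over a 100 ms, 1,000 Mbps link takes about 100 ms: for small payloads, latency dominates. Bandwidth takes over as payloads grow; a 100 MB download on that second link is about 900 ms.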

Ready to make performance testing a seamless part of your daily workflow? The ShiftShift Extensions suite puts a powerful Speed Test, JSON formatter, and dozens of other developer tools right inside your browser, accessible with a single command. Stop juggling tabs and start working smarter. Download ShiftShift Extensions for free and supercharge your productivity today.